My work here is inspired by some of Jean-Claude Risset's work with beats as a form of musical expression. In this project, I set out to use beating frequencies to compose music. For each note in a song, the idea is to add together many cosine waves whose frequencies are very close together. At particular points in time, all of the closely spaced frequencies in a note come into phase, and that note stands out. For instance, in a signal made of 440.0 Hz, 440.1 Hz, and 440.2 Hz cosines, each adjacent pair (440.0/440.1 Hz and 440.1/440.2 Hz) beats once every 10 seconds, so all three cosines line up once every 10 seconds. What makes this unique is that the program only needs to determine the cosine waves right at the beginning. After that, it can let them run forever without changing anything, because the system is completely time-invariant. The song should repeat infinitely, with the notes that stand out, and the times at which they do, chosen ahead of time.

I'm doing this because it seems like a neat way to make music. It relies solely on beats, which I expect will give rise to a unique timbre. Also, the whole thing is time-invariant, which seems cool to me since, intuitively, most songs are highly time-varying. The challenge will be to determine the phase of each cosine and the number of cosines needed. Each note should "play" only once, at the correct time, within a chosen interval. Each note's attack should also be distinct, so that the notes don't just blur together all over the place.

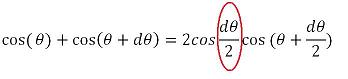

I started out in ChucK with sine oscillators to do some experimentation. Before I could go too far, I had to do some math.
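Here is a sketch of the relevant math. It assumes each note is built from N unit-amplitude cosines centered on the note frequency f and spaced Δf = 1/T Hz apart, where T is the song length. It also assumes the cosines are phased so that they all line up at the note's onset t_0. This setup is an assumption for illustration. Summing the cosines as a geometric series gives a carrier at f multiplied by a periodic envelope:

```latex
x(t) = \sum_{k=0}^{N-1} \cos\!\Big(2\pi \big(f + (k - \tfrac{N-1}{2})\,\Delta f\big)(t - t_0)\Big)
     = \cos\!\big(2\pi f\,(t - t_0)\big)\,
       \frac{\sin\!\big(\pi N \Delta f\,(t - t_0)\big)}{\sin\!\big(\pi \Delta f\,(t - t_0)\big)},
\qquad \Delta f = \frac{1}{T}
```

When t - t_0 is a multiple of T, the envelope's magnitude reaches its maximum of N (taking the limit where the denominator vanishes). The note therefore pops out once per pass through the song. The envelope's first zeros fall at t - t_0 = ±T/N, so using more cosines per note gives a sharper attack. Under this setup, the phase of the k-th cosine is simply -2π f_k t_0.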

I created a program in C that takes four arguments: an input file that has a musical score, the length of the output song in seconds, the number of cosines to add together for each note, and the output wav filename.

The format of the input files was as follows:

8

| Piece | Score | Command | Output | Frequencies | Frequency list |
| --- | --- | --- | --- | --- | --- |
| G Arpeggio (10 sec) | arpeggio.txt | beatsynth arpeggio.txt 10.0 100 arpeggio.wav > arpeggio.freq | arpeggio.wav | 700 | arpeggio.freq |
| G Arpeggio (5 sec) | arpeggio.txt | beatsynth arpeggio.txt 5.0 100 arpeggio5.wav > arpeggio5.freq | arpeggio5.wav | 700 | arpeggio5.freq |
| G Arpeggio (2.5 sec) | arpeggio.txt | beatsynth arpeggio.txt 2.5 100 arpeggio2.5.wav > arpeggio2.5.freq | arpeggio2.5.wav | 700 | arpeggio2.5.freq |
| Happy Birthday (10 sec) | birthday.txt | beatsynth birthday.txt 10.0 60 birthday.wav > birthday.freq | birthday.wav | 480 | birthday.freq |
| My 66.6-second musical statement: "Wanna Be Startin' Somethin'" (Michael Jackson) | wanna.txt | beatsynth wanna.txt 66.6 500 wanna.wav > wanna.freq | wanna.wav | 6000 | wanna.freq |

| File | Purpose |
| --- | --- |
| midterm.ck | To test out basic concepts before moving on to more complicated code |
| midterm.c | A first (inefficient) implementation of the final product, to test synthesizing output from a musical score |
| (*)midterm.cpp | A refined version of the program that merges identical frequencies into phasors for more efficient processing |

This design met the specifications for using beating cosine waves to make notes of precise frequency "pop out" at precise times during a song. What makes this method of composition so neat is that, technically, every note is playing throughout the entire song, yet each one pops out of the background at just the right time. This makes for a really cool, almost underwater effect. Because the attacks are not infinitely sharp (it takes some time for the beats to rise to a peak), the music does sound rather blurry. And because all the notes sit in the background simultaneously, the music has an ethereal, otherworldly timbre. Even cooler, as a note approaches its peak, the listener can sort of anticipate it, because nearby beats begin to grow in amplitude. This is especially obvious just before the piece jumps way up in pitch.

Since this was merely a demonstration of a concept, there are many extensions that could be made if it were to be refined into an actual composition tool. The first thing I would do is extend the program to play chords. Nothing special should be needed beyond allowing two or more notes to play at the same time, since all of the math stays the same. Also, I came up with my own format for musical scores. It would be more natural for the program to read MIDI files, effectively turning it into a MIDI synthesizer. The note-on events in particular should make it easy to figure out exactly when each note's attack falls. In terms of perceptual quality, I might let users specify a harmonic pattern for each note, so that notes sound richer and perhaps even, in a weird way, like actual instruments. Lastly, I would write a basic pitch-detection program that steps through an input sound file in small time increments (presumably using the FFT). It would find the 4-5 strongest frequency peaks in each increment and feed them to the beat synthesizer, which would resynthesize the most important notes from the input sound as beats.